What is machine learning?

The terms artificial intelligence (AI) and machine learning (ML) are closely related but should not be used interchangeably. AI broadly refers to the endeavor of creating a machine or program that can think, act and interact like a human. One way to pursue this goal is to program computers so that they can learn to solve a particular task, potentially better than the person who wrote the program. This approach is referred to as ML and is one of the possible manifestations of AI.

ML involves the development of algorithms and techniques that learn to recognize patterns in data sets. Depending on how the "learning" takes place, a distinction is made between supervised, unsupervised and reinforcement learning, as well as various hybrid forms. The learned patterns and relationships can then be used, for example, to classify or cluster previously unseen data.

Research on AI and ML began in the 1950s and 1980s, respectively. Its major milestones include IBM's Deep Blue supercomputer, which defeated world chess champion Garry Kasparov in a six-game match in 1997. Driven by performance gains in the underlying computing hardware, increasing data availability and current trends such as cloud computing, modern ML applications have the potential to create a wide range of economic benefits across all industries (Kashyap et al., 2017; Larrañaga et al., 2018).

How Machine Learning can provide competitive advantages in the financial sector

It has become increasingly apparent in recent years that ML can provide competitive advantages by improving predictions, optimizing the allocation of operational resources and personalizing service delivery. Especially in data-driven application domains with large volumes of transactional data, ML can make a significant contribution to the efficient processing and evaluation of the relevant data. In a previous post, we identified three promising application areas for ML in the financial sector:

- Impressing customers by using AI

- Accelerating and simplifying processes

- Managing institutions more effectively by means of AI

Information security risks can erode the value added by ML

This positive potential is driving the transition of ML from a laboratory curiosity to a practical technology that is widely commercialized and deeply embedded in enterprise information systems. While the practical focus in recent years has tended to be on rapid development and the potential positive effects on efficiency and innovation, it is now becoming increasingly clear that ML also poses significant risks (Heinrich et al., 2020; Rosenberg et al., 2021). These risks range from issues concerning data sources, data protection and data sovereignty to adversarial attacks on the ML model itself. According to a recent Gartner report, 30% of all AI cyberattacks in 2022 will leverage data poisoning (manipulation of the training data set), ML model theft or the manipulation of input data, for example with the intention of causing a particular input to be misclassified.

This assessment is not mere speculation, as known attacks on ML applications demonstrate (e.g. the 2016 scandal involving Microsoft's Twitter chatbot Tay). Such attacks are particularly significant when they target critical ML applications (e.g. those processing personal data), which financial institutions are increasingly using as well. It is with good reason that the European Commission has drafted legislation to regulate the use of ML and protect users' data.

The legislative initiative envisages four risk classes for AI applications:

- Unacceptable risk: AI applications that pose a threat to the safety, livelihoods and rights of EU citizens are to be banned.

- High risk: AI applications that can adversely affect people's safety or fundamental rights are considered high-risk. A set of binding requirements (including a compliance and risk assessment) is to be introduced for these high-risk systems.

- Limited risk: these AI applications are to be subject to a limited number of obligations (e.g. transparency).

- Minimal risk: for all other AI applications, development and deployment in the EU is to be enabled without additional legal obligations beyond existing legislation.

Of particular relevance to financial institutions is that, under the current draft legislation, ML applications such as credit assessment or credit scoring would be classified as high-risk and thus subject to risk-reducing transparency and security obligations. In serious cases, violations can result in fines of up to EUR 30 million or 6% of the company's global annual turnover, whichever is higher.

AI systems must be made fit for the future to open up new potential: security & transparency

Given current cybersecurity threats, increasingly sophisticated attack methods and tightening regulatory requirements, it is essential to raise awareness of ML security issues. The information security risk to ML applications is already real today, and practitioners are facing this challenge.

To overcome these problems in the long term and achieve a strategic competitive advantage through the use of modern ML systems, companies should start building up the necessary skills and implementing an AI risk strategy now. In this context, companies should identify …

- who can attack their systems and how (threat situation),

- how resilient their systems are against such a threat and

- whether the resulting risk can be accepted or whether risk-minimizing measures must be taken.

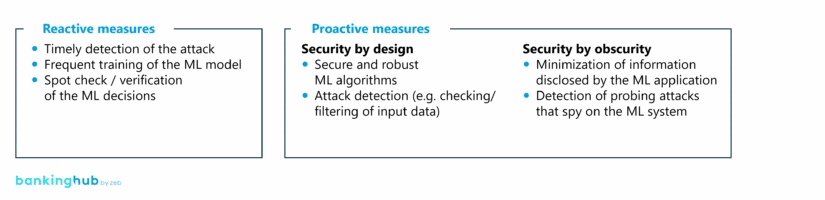

Security and transparency are the key words in the context of ML. Transparency means that ML-based decisions must be traceable: ML applications must not act as a completely inscrutable black box. Moderate, application-based regulation and a thoughtful selection and combination of application components should help achieve this transparency goal. Various additional measures are available to achieve the desired level of security for ML applications; these are shown in the overview.

The security of (customer) data and its appropriate use in ML applications must be ensured, and not only for regulatory reasons. Successfully implementing the three information security protection goals of "confidentiality", "integrity" and "availability" across the entire IT landscape is just as crucial for customer trust, which is the basis of any financial transaction. In the future, this will offer the potential for increased user acceptance and thus the opportunity for companies to differentiate themselves from competitors in the market through new, innovative ML applications.
